The Next Generation Image Factory

Building VM images for Glance is essential for a secure and reliable cloud platform based on OpenStack. Public cloud operators face extra challenges, since users demand up-to-date, secured, and maintained images. Unfortunately, no convenient framework exists to flexibly build a wide variety of OS images for OpenStack-based clouds. The aim of our agile-transformed Images squad (previously called the Image Factory) is to transparently develop, build, and support hardened and patched OpenStack Glance machine images (Linux/Windows) for instances and bare-metal servers on the Open Telekom Cloud (OTC) public and hybrid clouds. The images are also certified with the respective vendors wherever necessary.

In this article, we describe our efforts toward building an Image Factory as a Service (IFaaS) that rapidly and automatically builds OS images for several OpenStack environments in the Open Telekom Cloud (OTC). This is necessary to account for different hardware configurations, such as GPU or FPGA controllers, that are tricky to bake into the images.

The Legacy Image Factory

As demonstrated at the OpenStack Boston Summit 2017, the classic Image Factory builds hardened and patched images very effectively and transparently for Open Telekom Cloud (OTC) instances and bare-metal servers. The images are published along with their build logs, test results, signatures, and package lists, so that customers can use them, possibly in other cloud environments as well.

Pros

- Uses standard open source build tools (Kiwi and Disk Image Builder)

- Comprehensive set of scripts available

- Developed and tested for 2+ years

- Supports most of the Linux distros

Cons

- The build environment is very static

- Build environment is not easily reproducible

- Scripts need experience and care to run

- cron jobs are used for periodic builds

- Trending/Stats not available

- Windows Image build support missing

The Next Generation Image Factory

Major architectural changes have been made to the Image Factory to work toward a disposable and reproducible image build environment. Harnessing the Infrastructure-as-Code (IaC) capabilities of the cloud, a Terraform template deploys the components needed to build images. The two main entities are the Jenkins server and the NFS server. The Jenkins instance, the NFS server, and the jump servers are configured on first boot, and all the required Jenkins jobs are created automatically from a git repository.

The images are built on temporary build hosts. These build hosts are spawned on demand, used to build the image, and deleted afterwards unless the requester chooses to keep them. This ensures a clean and standard environment every time we build an image, and it also minimizes the amount of resources used to build images. The image builds do rely on some static pre-existing infrastructure, including several repository mirror servers, a git repository, LDAP slaves for directory services, and KMS. All the build steps are implemented in a Jenkins pipeline that includes cloning from git sources, building, registering the image in Glance, testing, and signing the image with a GPG key. The host used to build the image is added as a temporary slave under Jenkins and is disposed of after the image build process is completed.
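The lifecycle of a temporary build host (spawn on demand, build, delete unless the requester keeps it) can be illustrated with a small Python sketch. This is not the code used in the Image Factory, which runs as a Jenkins pipeline; `FakeCloud`, `build_host`, and the `keep` flag are hypothetical names chosen for illustration, and the in-memory `FakeCloud` merely stands in for real cloud API calls.

```python
from contextlib import contextmanager
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class FakeCloud:
    """Hypothetical stand-in for the cloud API; it only tracks host names."""
    hosts: List[str] = field(default_factory=list)
    counter: int = 0

    def create_host(self, image_name: str) -> str:
        self.counter += 1
        host_id = f"build-{image_name}-{self.counter}"
        self.hosts.append(host_id)
        return host_id

    def delete_host(self, host_id: str) -> None:
        self.hosts.remove(host_id)


@contextmanager
def build_host(cloud: FakeCloud, image_name: str, keep: bool = False) -> Iterator[str]:
    """Spawn a temporary build host and delete it afterwards unless keep=True."""
    host_id = cloud.create_host(image_name)
    try:
        yield host_id
    finally:
        # The host is removed even if the build raised an error,
        # unless the requester asked to keep it (e.g. for debugging).
        if not keep:
            cloud.delete_host(host_id)


if __name__ == "__main__":
    cloud = FakeCloud()
    with build_host(cloud, "ubuntu") as host:
        print("building on", host)
    print("hosts left after the build:", cloud.hosts)
```

Tying the deletion to the end of the `with` block means a failed build cannot leave a stale host behind, which is the property that keeps every build environment clean.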

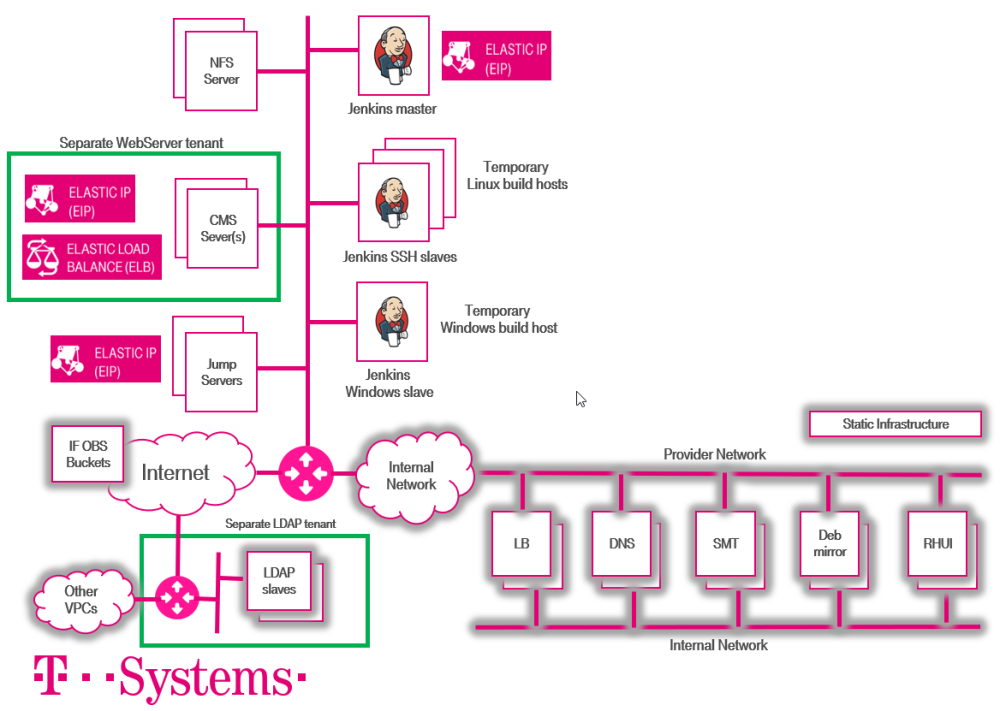

The overall architecture of the next generation Image Factory is shown below in figure 1.

1. Overall architecture of the next generation Image Factory

At the heart of the next generation Image Factory is the Jenkins master, which is the starting point for triggering all the Jenkins jobs. The NFS server provides shared storage for the temporary build hosts, and the resulting artifacts, including the qcow2 image files, reside there. Jump servers provide access to the environment. The web servers and the LDAP slaves are hosted in a separate tenant. The LDAP slave infrastructure lets the admins and the users of the Image Factory log in to the various machines using their LDAP accounts. The OBS buckets used for the images are hosted in a production tenant. As previously mentioned, the build process also relies on static infrastructure that includes DNS, repository servers, and Windows KMS.

Pros

- Self-service Jenkins pipeline

- Users need not touch/access the scripts

- Transient build hosts (Jenkins SSH slaves) provide added security

- Resource utilization minimized

- Lots of images can be built in parallel

- Images accessible by the customers over OBS via the new CMS

- Reproducible templated Infrastructure (Terraform based)

- Statistics on build times/trends

- NFS server’s storage utilization minimized

- Email alerting directly from Jenkins to job/OS owner with team in CC

- Parts of the process could be handed over to Operations

- Windows Image build to be supported as well

Cons

- Considerable development and testing effort

- New infrastructure to be managed, especially Jenkins

- New skills needed

Build Pipeline

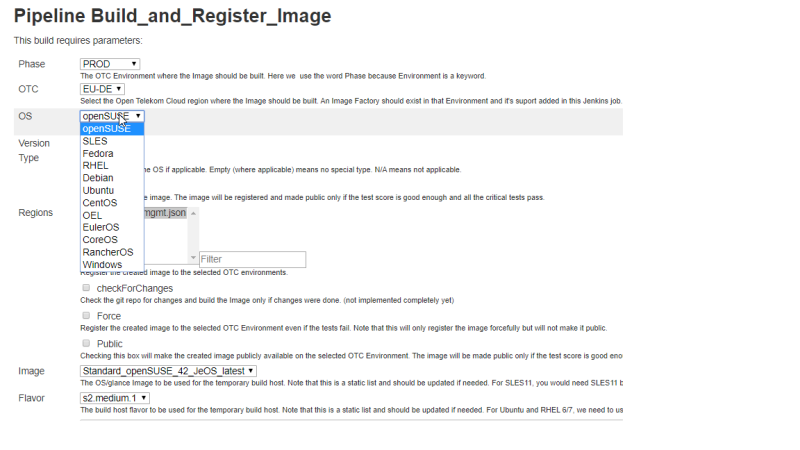

The image build pipeline is a self-service parameterized Jenkins job that lets the user build a specific Linux OS (Windows builds are yet to join the process). Some main parameters are shown below.

2. Image build pipeline parameters

The build pipeline comprises the following stages, which run one after the other.

- Initialize & Start iperf3 on master

- Spawn Build Host

- Add Jenkins Slave

- Git Checkout & Check

- Configure Slave

- Run Prepare & Import GPG Key

- Build Image

- Register as Private Image

- Perform Tests

- Sign Image

- Hashdeep

- Upload to other Regions

- Set publish Image request

- Perform Clean-up

- Destroy Build Host

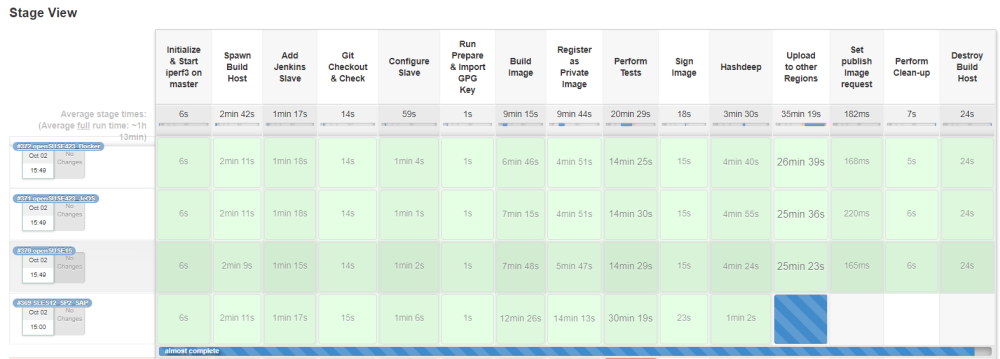

Figure 3. Various stages of the build process
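The sequential character of the stages listed above can be sketched in Python as a simple stage runner. This is an illustration only, not the actual pipeline, which is written as a Jenkins pipeline. The function `run_pipeline` is a hypothetical name, and the sketch assumes that the clean-up stages should still run when an earlier stage fails, which the article does not state explicitly.

```python
from typing import Callable, List, Tuple

# A stage is a (name, action) pair; the action raises an exception on failure.
Stage = Tuple[str, Callable[[], None]]


def run_pipeline(stages: List[Stage], cleanup: List[Stage]) -> List[str]:
    """Run stages in order, stop at the first failure, then run all clean-up stages.

    Returns a log with one "name: status" entry per executed stage.
    """
    log: List[str] = []
    for name, action in stages:
        try:
            action()
        except Exception as exc:
            log.append(f"{name}: FAILED ({exc})")
            break  # later build stages are skipped
        log.append(f"{name}: ok")
    # Assumption: clean-up is attempted regardless of the build result,
    # and one failing clean-up stage does not prevent the next one.
    for name, action in cleanup:
        try:
            action()
            log.append(f"{name}: ok")
        except Exception as exc:
            log.append(f"{name}: FAILED ({exc})")
    return log


if __name__ == "__main__":
    def failing_build() -> None:
        raise RuntimeError("image build failed")

    for entry in run_pipeline(
        stages=[
            ("Spawn Build Host", lambda: None),
            ("Build Image", failing_build),
            ("Perform Tests", lambda: None),
        ],
        cleanup=[
            ("Perform Clean-up", lambda: None),
            ("Destroy Build Host", lambda: None),
        ],
    ):
        print(entry)
```

In this example run, "Perform Tests" never executes because "Build Image" fails, while both clean-up stages still run so that the build host does not linger.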

Stage 1 initializes some parameters and starts an iperf3 server on the Jenkins master node if it is not already running. The iperf3 server is needed for some network performance tests in a later stage. Stage 2 creates a temporary build instance that is used to build the image. Stage 3 adds the created build instance as an SSH slave to the Jenkins master. The remaining stages, with the exception of the last two clean-up stages, run on the temporary SSH slave.

Stage 4 checks out the sources from git, which include the Kiwi and Disk Image Builder descriptors needed to build the images. An optional check, not yet fully implemented, aims to detect changes and abort the build if nothing has changed for the image to be built. Stage 5 configures the SSH slave by installing the required packages depending on the OS flavor. The Kiwi and Disk Image Builder packages are installed in this step along with some other required packages. Stage 6 prepares the environment for the actual image build process, e.g., the various names and directories are evaluated here. Stage 7 invokes the Kiwi or Disk Image Builder scripts to build the image and convert it to qcow2 format if needed.

Stage 8 registers the built image as a private image under the subject tenant. Stage 9 performs tests on the registered private image. It spawns a temporary instance based on the private image registered in the previous stage. The test suite comprises various scripts that validate that the image is production ready. Since we let users download the built images and related files for verification, Stage 10 signs the qcow2 image file, the test results, and some other files with our GPG key to show that the image files really come from us. Stage 11 processes the qcow2 file and computes the audit hash sets. These hashes are computed for security reasons, in case we suspect that the original image was compromised.

The image is built and tested in one master environment and is then registered in various other regions. Stage 12 registers the private image in the other regions. Stage 13 sets the publishing trigger to make the image public in the various regions. This is needed because we use a different workflow to make the images public, as the Glance admin API is not publicly reachable. Stage 14 performs some clean-up on the build machine, which is finally deleted in Stage 15.
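To give an idea of what computing audit hashes for an image file involves, here is a minimal Python sketch. It is not the Hashdeep stage itself: it only hashes a single file as a whole, the function name `audit_hashes` is hypothetical, and the choice of MD5 and SHA-256 is an assumption made for illustration.

```python
import hashlib
from pathlib import Path
from typing import Dict, Iterable


def audit_hashes(
    path: Path,
    algorithms: Iterable[str] = ("md5", "sha256"),  # assumed choice of algorithms
    chunk_size: int = 1024 * 1024,
) -> Dict[str, str]:
    """Compute several digests of one file in a single pass.

    The file is read in chunks so that large qcow2 files need not fit in memory.
    """
    hashers = {name: hashlib.new(name) for name in algorithms}
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            for hasher in hashers.values():
                hasher.update(chunk)
    return {name: hasher.hexdigest() for name, hasher in hashers.items()}
```

If the stored digests of a published image are later compared with freshly computed ones, any mismatch indicates that the file has changed since it was built.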

The build logs can be found in the Jenkins console, and detailed logs can be found on the NFS server. The initial version of the pipeline was one big monolithic piece of Groovy code that worked well, but to organize it better and especially to reuse parts of the code in other pipelines, we now use the shared library concept in Jenkins. This has proven to be very useful.

Credits

The next generation Image Factory has been in action and publishing images since January 2019. It would be unfair not to appreciate the efforts of the founders of the legacy Image Factory. The on-demand deployable next generation Image Factory relies heavily on the scripts developed under the legacy Image Factory. Therefore, we would like to especially thank Kurt Garloff and Daniela Ebert for their excellent work. Thanks are also due to Roman Schiller for introducing the shared library concept and to Sabrina Mueller for her great job in moving the pipeline building process to production and for implementing the shared library concept.